Pengfei Zhu

I am a fourth-year PhD student at the NJU Meta Graphics & 3D Vision Lab, part of the Department of Computer Science and Technology at Nanjing University, supervised by Prof. Yanwen Guo and Prof. Jie Guo. Before that, I received my B.Eng. degree in 2022 from the School of Information and Software Engineering at the University of Electronic Science and Technology of China. In 2025, I worked as a research intern at Alibaba Group, focusing on diffusion-based material reconstruction. From 2021 to 2022, I was a graphics algorithm intern at SenseTime, where I mainly worked on data-generation toolchains for autonomous driving.

Email: pfzhu [AT] smail.nju.edu.cn

Research

My research focuses on material reconstruction and generation.

MatMart: Material Reconstruction of 3D Objects via Diffusion

Xiuchao Wu*, Pengfei Zhu*, Jiangjing Lyu‡, Xinguo Liu, Jie Guo, Yanwen Guo, Weiwei Xu, Chengfei Lyu†

IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025

paper

MatMart adopts a two-stage reconstruction pipeline: it first predicts materials accurately from the input views, then performs prior-guided material generation for unobserved views, yielding high-fidelity results. MatMart obtains both its prediction and generation capabilities through end-to-end optimization of a single diffusion model, without relying on additional pre-trained models, which makes it more stable across diverse types of objects.

StableIntrinsic: Detail-preserving One-step Diffusion Model for Multi-view Material Estimation

Xiuchao Wu*, Pengfei Zhu*, Jiangjing Lyu‡, Xinguo Liu, Jie Guo, Yanwen Guo, Weiwei Xu, Chengfei Lyu†

arXiv, 2025

paper

To address the over-smoothing problem in one-step diffusion, StableIntrinsic applies losses in pixel space, each designed around the properties of the material. In addition, StableIntrinsic introduces a Detail Injection Network (DIN) that eliminates the loss of detail caused by VAE encoding and further sharpens the predicted materials.

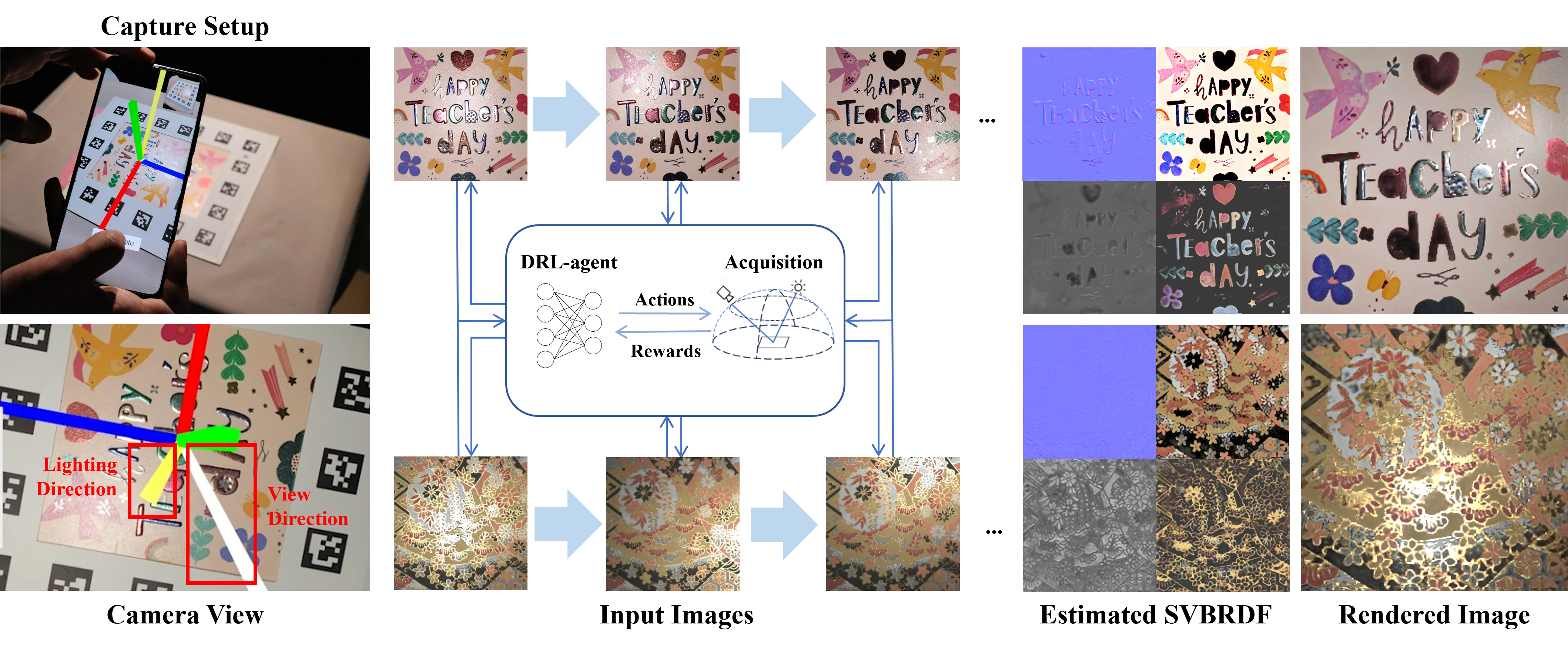

Appearance-aware Multi-view SVBRDF Reconstruction via Deep Reinforcement Learning

Pengfei Zhu, Jie Guo†, Yifan Liu, Qi Sun, Yanxiang Wang, Keheng Xu, Ligang Liu, Yanwen Guo†

SIGGRAPH Conference Papers, 2025

paper

To determine the optimal sampling set for each material while minimizing capture cost, we introduce an appearance-aware adaptive sampling method. We model the sampling process as a sequential decision-making problem and solve it with a deep reinforcement learning (DRL) framework.

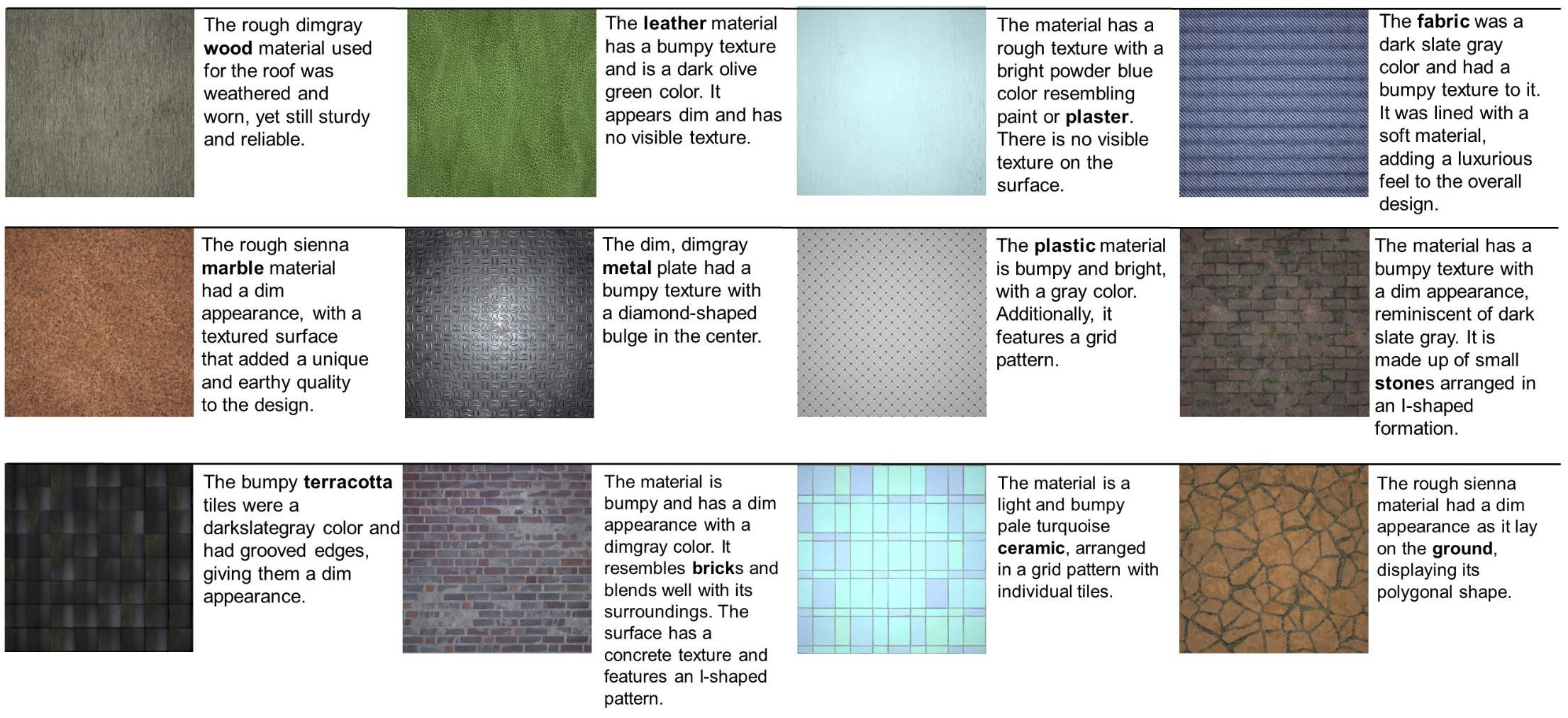

DTDMat: A Comprehensive SVBRDF Dataset with Detailed Text Descriptions

Mufan Chen, Yanxiang Wang, Detao Hu, Pengfei Zhu, Jie Guo†, Yanwen Guo

Proceedings of the 19th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry, 2025

paper

Existing material datasets lack essential text information. To address this, we design an automatic annotation tool that generates full text descriptions. The tool extracts tags covering six aspects from BRDF maps: intrinsic type, texture, color, roughness, lightness, and other relevant attributes.

SVBRDF Reconstruction by Transferring Lighting Knowledge

Pengfei Zhu, Shuichang Lai, Mufan Chen, Jie Guo†, Yifan Li, Yanwen Guo†

Computer Graphics Forum, 2023

paper

We introduce a novel method that reconstructs SVBRDF parameters from multiple images without requiring calibration.

Design and source code from Jon Barron's website and Leonid Keselman's Jekyll fork.